As we’ve grown the company, we’ve learned that every service provider we depend on is a source of operational risk. We’re Enchant, and we build a SaaS product in the CRM space. This is a story of how a small oversight caused a lot of pain and how we try to minimize the impact of future similar events.

I was at my desk, listening to some jazz and working on a design doc.

My phone went off at full blast. Startled, I jumped in my seat and grabbed my phone.

Oh no! This wasn’t a phone call. It was an emergency alert.

Like a wartime siren, loud and obnoxious, designed to wake you up.

“Enchant down!” it said.

I knew this message. It was from our secondary monitoring system (yes, we have two). It monitors Enchant from outside our network, and gives us visibility into system health from the perspective of a customer.

The alert was telling me that the product was down for all of our customers. This was happening around 1pm in Toronto (or 10am in San Francisco). This is prime time - during business hours for ALL of North America.

My heart was racing. We needed to act fast.

Within the next minute, our primary monitoring system sent out more detailed alerts (as internal systems were failing) and the team sprang into action.

We run a critical service. Our customers use Enchant to communicate with their customers and demand 24/7/365 uptime. We’ve engineered the platform to be tough - redundant systems, rolling deploys, automatic failovers, load balancers, hot backups, and many other complicated technical things.

My point is: We prepared for this day. We did the risk analysis. We checked all the boxes. We had everything in place. So why are the alarms going off?! What is going on?!?!

I joined the team on the hunt. A number of our internal systems weren’t happy. Cascading failures were throwing us off the scent. When one system fails, other systems that talk to it also start failing. And when systems depend on each other, it becomes difficult to figure out the root cause.

It took us a few minutes, but we chased it down - our systems were experiencing huge delays communicating with one of the services we use from our hosting provider (S3, from Amazon Web Services, or AWS). This caused cascading backlogs on our end and brought Enchant to a crawl. To top it off, we couldn’t increase capacity at that moment because Amazon’s other internal systems were also affected and falling over.

We had prepared for S3 going down. What we hadn’t prepared for was S3 slowing down. This small oversight brought Enchant to a crawl.

I checked the AWS status website - nothing.

I checked the news - nothing.

I checked Twitter - nothing.

I checked the AWS status website again - nothing!! C’mon guys!

I checked Twitter again - aah wait.. I see something.

I saw tweets from other people also experiencing similar failures with AWS services. I also saw tweets about outages at other companies. A lot of companies depend on AWS for hosting… and in that moment, we were all feeling the pain.

Ok, so this was good news for us, kind of. It meant we knew what was going on. It also meant that we weren’t the root cause of this disaster. It meant that when I had to explain what happened to our customers, I’d be able to point to news articles about Amazon taking out half the internet with their outage.

But waiting for Amazon to figure out they have a problem and then act on it wasn’t an option. Who knew how long that would take! They hadn’t even updated their own status website yet.

While Enchant uses S3 for some of its functionality, the service is not so critical that its misbehaviour should knock Enchant completely offline. I felt that we could work around this and get things up and running again. I made the decision - we’re going to tighten any code in any subsystem that has anything to do with S3. We should be able to get to a point where we’re back in operation.

We did this in two phases.

First, we turned off every feature that depended on S3. This was pushed out about an hour after the incident started. I watched the monitoring systems to confirm customers were logging back into Enchant and getting back to work.
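To make the "turn it off" step concrete, here is a minimal sketch of a feature kill switch. It is an illustration, not Enchant’s actual code: the `FeatureFlags` class, the `render_ticket` function, and the `attachments` feature name are all hypothetical, since the story doesn’t say which features depended on S3.

```python
class FeatureFlags:
    """In-memory kill switches. A real system would likely keep these
    in shared config so every server sees a change at once (assumption)."""

    def __init__(self, defaults):
        self._flags = dict(defaults)

    def disable(self, name):
        self._flags[name] = False

    def enable(self, name):
        self._flags[name] = True

    def is_enabled(self, name):
        # Unknown features are treated as off, the safe default.
        return self._flags.get(name, False)


def render_ticket(ticket, flags):
    """Build a ticket view, skipping the storage-backed part when it is off."""
    view = {"subject": ticket["subject"], "body": ticket["body"]}
    if flags.is_enabled("attachments"):  # hypothetical S3-backed feature
        view["attachments"] = ticket.get("attachments", [])
    else:
        view["notice"] = "Attachments are temporarily unavailable."
    return view
```

The point of the design is that the core of the page never touches the troubled dependency once the flag is off, so the rest of the product keeps working.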

But this wasn’t the real fix. We had just turned off some features completely.

So we got back to work. Enchant needed to be resilient to these kinds of failures. We modified any code that touched S3 to anticipate communication delays and handle them better. These changes went out in another half an hour.
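One common way to "anticipate communication delays" is to put a deadline on every call to the slow dependency and stop calling it for a while after repeated failures (a circuit breaker). The sketch below shows that general technique, which may or may not be what we shipped that day. `CircuitBreaker`, `call_with_deadline`, and every threshold in it are hypothetical names and values chosen for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


class CircuitBreaker:
    """Skips a misbehaving dependency after repeated failures, then retries later."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # seconds to wait before a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def is_open(self):
        if self.opened_at is None:
            return False
        if self.clock() - self.opened_at >= self.reset_after:
            # Cool-down elapsed: close the breaker and let a call through.
            self.opened_at = None
            self.failures = 0
            return False
        return True

    def record_success(self):
        self.failures = 0

    def record_failure(self):
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = self.clock()


_pool = ThreadPoolExecutor(max_workers=4)


def call_with_deadline(fn, breaker, timeout=2.0, fallback=None):
    """Run fn() with a deadline; return fallback if it is slow, failing, or skipped."""
    if breaker.is_open():
        return fallback  # fail fast instead of queueing behind a slow service
    future = _pool.submit(fn)
    try:
        # result() raises a timeout error if fn() has not finished in time.
        result = future.result(timeout=timeout)
    except Exception:
        breaker.record_failure()
        return fallback
    breaker.record_success()
    return result
```

A slow dependency is worse than a dead one precisely because callers keep waiting. The deadline bounds the wait, and the breaker stops new work from piling up behind it. Note that the abandoned call keeps running in its worker thread, so in practice you would also set timeouts on the underlying client.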

It was a waiting game at that point. We were operational, but in a partially degraded state. Our systems would self-heal the moment Amazon resolved the underlying issue with S3.

So I checked Amazon’s status site again… and yes, they had finally posted an update letting us know that something was wrong with S3. Ironically, the S3 failure had prevented them from updating their own status site!

Another 3 hours later, once the S3 issue was resolved, we were 100% operational again.

Every service provider you depend on is a risk

We built our company on the shoulders of giants (like Amazon). They’ve provided a lot of important value to us. In turn, this helped us focus on what we do best and deliver real value to our customers.

Every time we take on a new service provider, we also take on a number of risks that are out of our control.

Imagine: a service provider decided to do system upgrades (that caused small outages) on a schedule that didn’t make sense for your business. This has happened to us.

Imagine: a service provider announced new pricing and suddenly made it unreasonably expensive to upgrade, forcing you to do a complete migration to another provider. This has happened to us.

Imagine: a service provider decided to “accidentally” disable your account with no prior notice (in fact, no notice ever). They then went on to force you to upgrade to a higher plan before restoring your account. Yes, even this has happened to us.

At the end of the day, it doesn’t matter who caused the problem.

Our customers hired us because they trust us.

They trust that we’ll pick the right service providers.

They trust that we’ll build the necessary technical failsafes.

They trust that we’ll do whatever needs to be done to meet the expected reliability level.

Our company, our brand, is built on this trust. It’s a lot of responsibility we carry on our shoulders.

… and if we fail our customers because of a service provider we hired?

That’s on us. Always.

So I refuse to leave it to chance and see how things play out.

Managing the risk

Enchant depends on many service providers behind the scenes. I don’t think I can name a single one that we haven’t had issues with. Because, well, humans make mistakes.

This is the reality of building cloud-based software today.

So, instead, our goal has been to anticipate and mitigate risks which could cause significant harm to our business and our customers.

We started by listing all our service providers. Some of ours:

- The payment processor that vaults and charges customer credit cards.

- The web hosting provider that hosts our primary cluster.

- The email service providers we use under the hood.

- Database technology providers whose managed services we use.

- The content distribution network we use to deliver the payload for our messenger.

Once we had the list of our providers, it was time to figure out a few things for each of them:

- Risk Profile:

  - How much would it hurt the business if they had an outage?

  - What about a price increase?

  - What if they went out of business suddenly?

  - What about privacy law compliance failures?

  - … and any other plausible events that could hurt the business

- Lock In: Did they provide some hard-to-clone value that locked us in?

- Alternatives: Were there good enough alternatives?

- Replacement Effort: How hard is it to replace them?

- Strategy: Given the above, what’s the plan if we had to remove them?
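If you prefer code to spreadsheets, the same questions can be captured in a small data structure. This is a rough sketch under stated assumptions: the `ProviderRisk` fields mirror the list above, but the low/medium/high scale and the `review_order` sorting rule are invented here for illustration and are not part of the worksheet.

```python
from dataclasses import dataclass

# Assumed three-level scale; the worksheet may use something different.
LEVELS = {"low": 1, "medium": 2, "high": 3}


@dataclass
class ProviderRisk:
    name: str
    risks: dict              # event (e.g. "outage") -> "low" / "medium" / "high"
    lock_in: str             # hard-to-clone value that locks us in
    alternatives: list       # good-enough replacements, if any
    replacement_effort: str  # "low" / "medium" / "high"
    strategy: str            # the plan if we had to remove them

    def worst_risk(self):
        """Return the highest level among the listed risk events."""
        return max(self.risks.values(), key=LEVELS.__getitem__)


def review_order(providers):
    """Sort providers so the riskiest, hardest-to-replace ones come first."""
    return sorted(
        providers,
        key=lambda p: (LEVELS[p.worst_risk()], LEVELS[p.replacement_effort]),
        reverse=True,
    )
```

Re-running the sort after each review makes it easy to see when a provider has drifted from a low risk to a high one.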

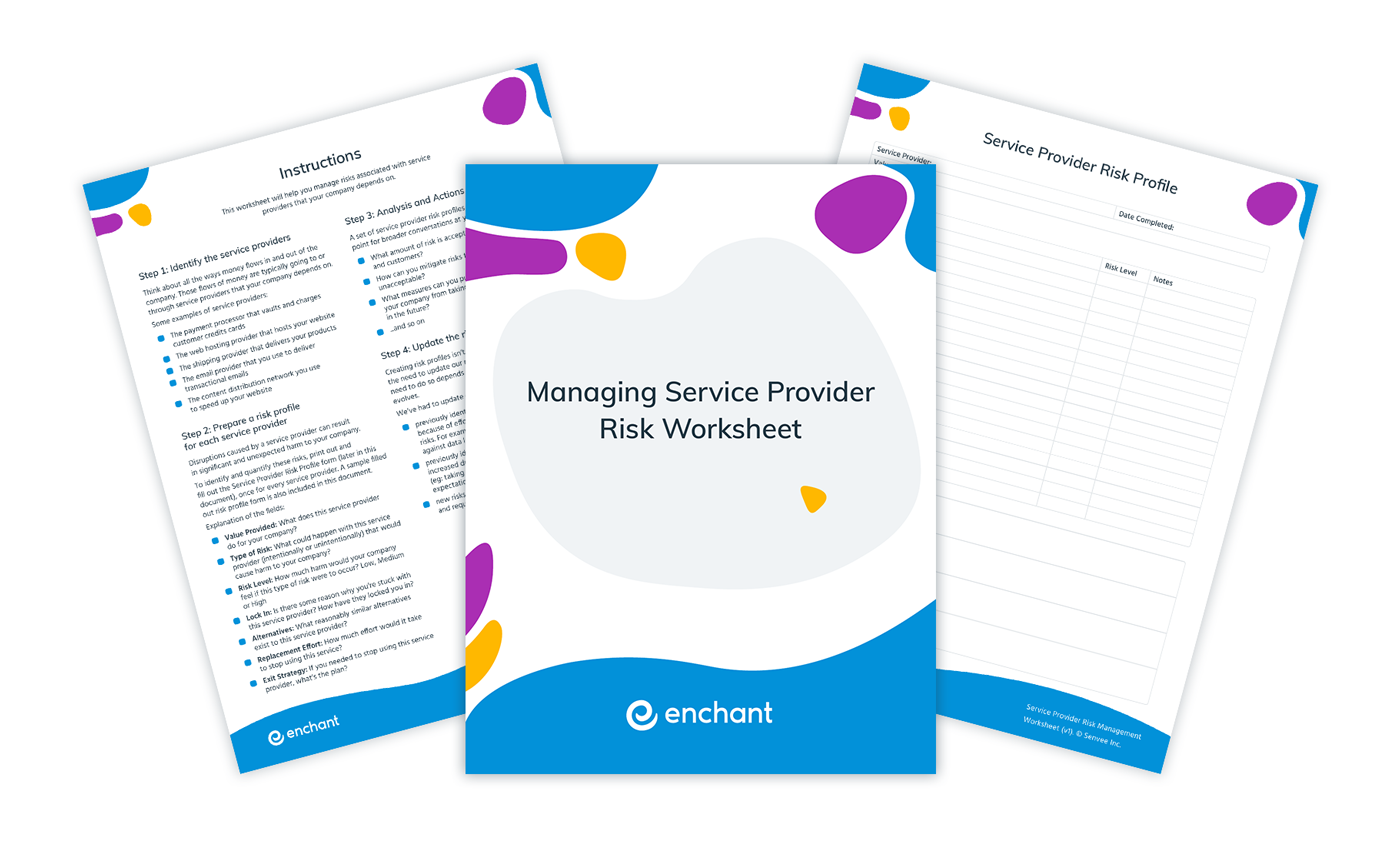

I’ve put together a worksheet to help you do your own risk analysis:

Download: Managing Service Provider Risk Worksheet

I should point out that this kind of analysis doesn’t just happen once.

As our business has grown, we’ve seen low risks become medium risks… and medium risks become high risks. Things we could write off in the early days became increasingly difficult as we picked up larger customers and as our customer base grew.

Once we had a good understanding of the risks we’d taken on, we were able to plan for and take the actions necessary to either mitigate those risks or be better prepared for them.

In many cases, we have a planned exit strategy.

In some cases, we even have a live integration with an alternative provider. We’re ready to switch over, whenever the need comes. This greatly reduces the ‘switching time’ in an emergency.
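As an illustration of what a "live integration with an alternative provider" can look like in code, here is a minimal failover sketch. It assumes each provider is wrapped in a plain function that raises on failure. `send_with_failover` and `AllProvidersFailed` are hypothetical names, not any real provider’s API.

```python
class AllProvidersFailed(Exception):
    """Raised when every configured provider has failed."""


def send_with_failover(message, providers):
    """Try each (name, send_fn) pair in order; return the first success.

    providers is an ordered list, primary first. Each send_fn takes the
    message and either returns a result or raises an exception.
    """
    errors = []
    for name, send in providers:
        try:
            return name, send(message)
        except Exception as exc:
            # Remember the failure and move on to the next provider.
            errors.append((name, exc))
    raise AllProvidersFailed(errors)
```

Because both integrations are already wired in and exercised, switching in an emergency becomes a matter of reordering a list rather than writing new code under pressure.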

In other cases, we’ve even chosen to exit a provider because the risk profile wasn’t tenable anymore for our business and customers… before they caused any harm to our business.

The takeaways

- Every time your company depends on a new service provider, it’s also taking on a number of new risks, some hard to quantify.

- With a little bit of planning and effort, you can mitigate many of those risks and make your business more resilient as a result.

- I’ve put together a worksheet to give you a head start in managing service provider risk. Download it here.